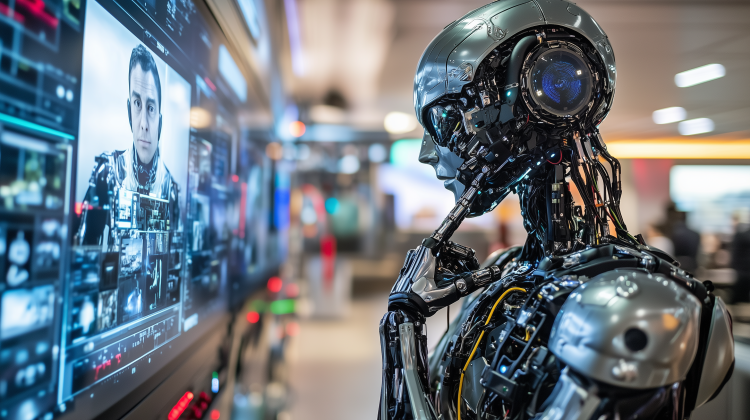

“Qwen2-VL marks a new era in AI video analysis, enabling deeper insights into long-form content across media, education, and security.”

Alibaba has introduced Qwen2-VL, its latest AI model, which can analyze and understand videos more than 20 minutes long. The model marks a notable step forward in video content analysis and widens the possibilities for AI in industries such as media, education, and security.

What Is Qwen2-VL?

Qwen2-VL is a vision-language model (VLM): an AI system that combines visual and linguistic information to understand and interpret content. It belongs to the broader class of multimodal models, which process different types of data, such as text, images, and video, and generate coherent, relevant responses.

The “VL” in Qwen2-VL stands for Vision and Language, highlighting the model’s ability to bridge visual and textual elements within a single framework. The model is trained on images and video, including long-form clips, and learns to connect visual cues (such as scenes, objects, and actions) with written language (captions, on-screen text, and the user’s text prompts). This fusion lets Qwen2-VL follow complex narratives and offer more nuanced interpretations than traditional single-modality models.

Key Features of Qwen2-VL

– Extended Video Analysis: One of Qwen2-VL’s standout features is its ability to handle videos longer than 20 minutes. Earlier models struggled with long-form content because staying coherent across so many frames is difficult. Qwen2-VL addresses this by sampling frames from the video and compressing them into visual tokens that its language model reads as a single sequence, so the analysis covers the whole clip rather than isolated fragments.

– Multimodal Processing: Qwen2-VL isn’t limited to video. It also accepts images and text, which makes it versatile across different applications. It can combine video frames with accompanying text (e.g., subtitles or descriptions) to build a rich, contextual understanding of the material. The audio track, however, is not part of its input, so spoken content is only available to the model when it appears as text.

– Enhanced Natural Language Understanding (NLU): Built on the Qwen2 language model, Qwen2-VL can interpret dialogue and narration that appear as subtitles, as well as written instructions embedded in the video. This makes it a powerful tool for generating summaries, captions, or structured reports from video content.

– Contextual Awareness: A critical aspect of Qwen2-VL’s design is its ability to maintain context over extended durations. Within its context window, the model can refer back to earlier scenes or discussions when interpreting later parts of a video, which makes those interpretations more accurate. This supports tasks such as scene segmentation, tracking objects across a video, and following on-screen dialogue over time.
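
One common way to make a long video tractable is to sample frames at a fixed rate and cap the total. The Qwen2-VL paper reports sampling videos at two frames per second and processing frames in pairs (3D convolutions with a temporal depth of two); the sketch below illustrates that idea in plain Python. The function name `sample_frame_indices`, the 768-frame cap, and the rounding to an even frame count are illustrative assumptions, not the model’s confirmed preprocessing code.

```python
def sample_frame_indices(total_frames: int, video_fps: float,
                         target_fps: float = 2.0, max_frames: int = 768,
                         frame_factor: int = 2) -> list[int]:
    """Return evenly spaced frame indices at roughly target_fps.

    Hypothetical defaults: 2.0 fps follows the sampling rate reported in the
    Qwen2-VL paper; the 768-frame cap and the rounding to a multiple of
    frame_factor (pairs of frames) are illustrative assumptions.
    """
    duration_s = total_frames / video_fps
    # Frames we would keep at the target rate, limited by the cap and the video itself.
    n = min(int(duration_s * target_fps), max_frames, total_frames)
    # Round down to a multiple of frame_factor, keeping at least one group.
    n = max(frame_factor, (n // frame_factor) * frame_factor)
    step = total_frames / n
    return [int(i * step) for i in range(n)]

# A 20-minute clip recorded at 30 fps has 36,000 frames.
indices = sample_frame_indices(total_frames=36_000, video_fps=30.0)
print(len(indices), indices[:4])
```

For that 20-minute clip, sampling at 2 fps would produce 2,400 frames, so the assumed cap applies and the function returns 768 indices spaced about 1.56 seconds apart. A real pipeline would also trade frame count against per-frame resolution to stay within a token budget.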

How Does Qwen2-VL Work?

At its core, Qwen2-VL builds on the transformer, a class of deep learning models originally developed for natural language processing tasks such as machine translation. Alibaba extends this approach to visual data by pairing a Vision Transformer (ViT) encoder with the Qwen2 language model, so the model can relate what is shown in a video to the text that accompanies it.
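
Mixing text and video in one transformer raises the question of how to encode position. The Qwen2-VL paper describes Multimodal Rotary Position Embedding (M-RoPE), which gives each token a (temporal, height, width) position triple: text tokens use the same value in all three slots, visual tokens take their frame and patch coordinates, and each new segment starts one past the largest position used so far. The sketch below computes only those position triples, not the rotary embedding itself; the `Segment` class and the `mrope_position_ids` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    """A hypothetical input segment: a run of text tokens or one video."""
    kind: str        # "text" or "video"
    length: int = 0  # number of text tokens (text segments only)
    frames: int = 0  # temporal steps (video segments only)
    height: int = 0  # patch rows per frame (video segments only)
    width: int = 0   # patch columns per frame (video segments only)

def mrope_position_ids(segments: list[Segment]) -> list[tuple[int, int, int]]:
    """Assign a (temporal, height, width) position triple to every token."""
    ids: list[tuple[int, int, int]] = []
    start = 0  # first position value available to the next segment
    for seg in segments:
        if seg.kind == "text":
            # Text: all three components share one 1D position, like ordinary RoPE.
            new = [(start + i, start + i, start + i) for i in range(seg.length)]
        elif seg.kind == "video":
            # Video: temporal id per frame, height/width ids per patch location.
            new = [(start + t, start + h, start + w)
                   for t in range(seg.frames)
                   for h in range(seg.height)
                   for w in range(seg.width)]
        else:
            raise ValueError(f"unknown segment kind: {seg.kind!r}")
        if new:
            # The next segment starts one past the largest value used here.
            start = max(max(triple) for triple in new) + 1
        ids.extend(new)
    return ids

prompt = [
    Segment("text", length=3),
    Segment("video", frames=2, height=2, width=2),
    Segment("text", length=2),
]
for triple in mrope_position_ids(prompt):
    print(triple)
```

For this prompt the function returns 13 triples: (0, 0, 0) through (2, 2, 2) for the opening text, (3, 3, 3) through (4, 4, 4) for the eight video tokens, and (5, 5, 5) and (6, 6, 6) for the closing text. Because visual positions advance along three axes instead of one, in this scheme a video uses far fewer distinct position values than it has tokens.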

To achieve this, the model is trained on large datasets that pair images and video with text. This teaches it associations between video frames and the corresponding language, enabling cross-modal reasoning. In practice, Qwen2-VL can recognize not just the objects or people in a video, but also how they relate to the accompanying text and the wider context.
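
Inside the language model, text tokens and visual tokens sit in one sequence, so the association described above is expressed through attention: a text token’s representation is compared with each visual token and mixes in the ones it matches best. The sketch below shows generic scaled dot-product attention for a single text query over three frame embeddings. The three-dimensional vectors are made-up stand-ins for learned embeddings; nothing here reflects Qwen2-VL’s actual weights, dimensions, or layer layout.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns the attention weights and the weighted sum of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

# Hypothetical frame embeddings (think "street", "dog", "car") and a text query
# embedding that happens to point toward the "dog" direction.
frames = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
text_query = [0.0, 2.0, 0.0]

weights, mixed = attend(text_query, keys=frames, values=frames)
best_frame = max(range(len(weights)), key=weights.__getitem__)
print(f"weights={[round(w, 3) for w in weights]} best_frame={best_frame}")
```

The text query puts most of its weight on the second frame, and `mixed` becomes a blend dominated by that frame’s embedding. In a trained model, the learned projections that produce queries, keys, and values are what encode the frame-to-language associations described above.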

Real-World Applications of Qwen2-VL

Qwen2-VL’s ability to handle complex, long-form video content opens doors for various practical applications:

– Media & Entertainment: Content creators and media companies can use Qwen2-VL for tasks like automated video editing, generating highlight reels, or producing video summaries. Because the model interprets visual and linguistic cues together, it is also well suited to content recommendation systems, where understanding both the narrative and the visual elements of a video can lead to better suggestions for viewers.

– Education: With the rise of online education, platforms are producing vast amounts of video content. Qwen2-VL can help by automating lecture notes and video summaries, and even by translating on-screen text and generated summaries into other languages for global accessibility.

– Security & Surveillance: Surveillance footage often runs for hours, making manual review a daunting task. Qwen2-VL can analyze long stretches of footage, identify key moments, and flag detected activities or objects so that a monitoring system can raise alerts. This makes the model valuable for security firms looking to streamline their video analysis.

– Healthcare: In medical fields where video data is critical (e.g., surgery recordings or diagnostic imaging), Qwen2-VL can analyze long-duration videos to identify patterns or key events. This could support clinical reviews, training programs, and even remote consultations, where experts can jump straight to the most important parts of a video instead of watching the entire recording.
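
In practice, each of these applications comes down to pairing a video with a task-specific instruction. The sketch below builds such a request as a list of chat messages, modeled on the video message layout shown in the Qwen2-VL model card; treat the exact keys as an assumption to verify against current documentation. The helper `build_video_request`, the prompt wording, and the file path are hypothetical, and the messages would still need to go through the model’s processor and generation step, which are not shown.

```python
# Hypothetical task prompts; wording is illustrative, not from Qwen2-VL docs.
TASK_PROMPTS = {
    "summary": "Summarize this video in five bullet points.",
    "lecture_notes": "Write structured lecture notes covering each topic in this video.",
    "key_events": "List the moments in this footage where a person enters or leaves the scene.",
}

def build_video_request(video_path: str, task: str, fps: float = 1.0) -> list[dict]:
    """Build a single-turn chat request pairing one local video with a task prompt.

    The message layout (a "video" item followed by a "text" item) is modeled on
    the Qwen2-VL model card; verify the keys against current documentation.
    """
    if task not in TASK_PROMPTS:
        raise ValueError(f"unknown task: {task!r}")
    return [
        {
            "role": "user",
            "content": [
                {"type": "video", "video": f"file://{video_path}", "fps": fps},
                {"type": "text", "text": TASK_PROMPTS[task]},
            ],
        }
    ]

messages = build_video_request("/data/lecture.mp4", "lecture_notes")
print(messages)
```

The result is a single user message whose content holds the video reference followed by the lecture-notes instruction. Keeping the prompts in one table makes it easy to reuse the same video pipeline across the media, education, security, and healthcare scenarios described above.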

Alibaba’s AI Vision

With Qwen2-VL, Alibaba aims to push the boundaries of what AI can achieve in video and multimodal content processing. The release of this model is part of a broader strategy to create AI-driven solutions for real-world problems, from simplifying content creation to enhancing security.

Alibaba’s focus on multimodal AI systems like Qwen2-VL underscores the importance of developing models that can understand multiple types of data simultaneously. This aligns with the growing trend in AI research, which recognizes the need for more holistic models that can analyze the increasingly complex and diverse data we encounter in the digital world.

Future Outlook

The release of Qwen2-VL is just the beginning of what Alibaba envisions for the future of AI. As the demand for smarter, more versatile AI systems continues to grow, we can expect further innovations in how AI models process, understand, and generate insights from complex datasets.

From empowering content creators to revolutionizing how we handle security, Qwen2-VL’s capabilities reflect a broader movement in AI — one that seeks to enhance human efficiency and understanding in an ever-evolving digital landscape.