Balancing privacy, transparency, and functionality is key to building ethical and effective AI systems.

Have you ever come across something so peculiar that you just had to figure it out? That’s how I felt when I first heard about ChatGPT’s strange response, or lack of one, to the name “David Mayer.” Instead of answering normally, ChatGPT crashes, produces an error, or avoids the name altogether. It’s puzzling, intriguing, and a perfect case study in the challenges AI faces in balancing transparency, moderation, and privacy.

Let’s unravel this curious case together, piece by piece.

The Mystery: What Happens When You Type “David Mayer”?

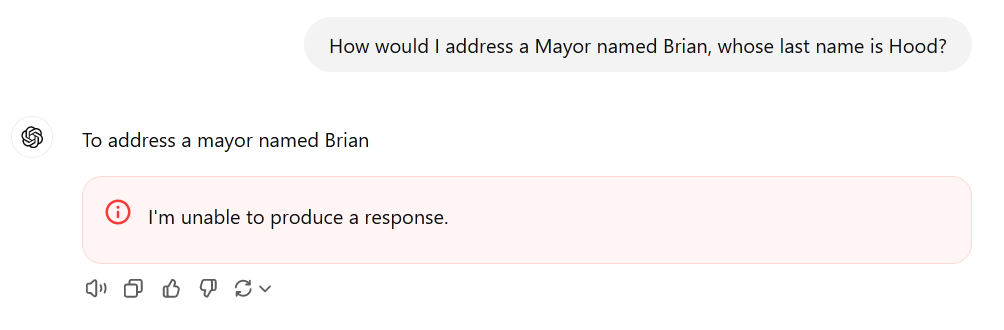

When users type “David Mayer” into ChatGPT, they often see one of three outcomes:

- The AI stops mid-response, failing to complete its answer.

- It returns a vague error, such as “I’m unable to process your request.”

- In rare cases, it produces a nonsensical or evasive response, leaving users even more confused.

For a tool as advanced as ChatGPT, this kind of glitch feels out of place. It’s capable of answering highly technical questions, discussing abstract topics, and even generating creative stories. So, why does a simple name cause it so much trouble?

Why “David Mayer” Specifically?

This isn’t about just any combination of words: the name “David Mayer” itself seems to be the trigger. Speculation about why ranges from privacy laws to technical errors and even far-fetched conspiracy theories. Before we jump to conclusions, let’s break down some plausible explanations.

Could Privacy Laws Be to Blame?

One of the leading theories points to global privacy regulations like the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States. These laws empower individuals to control how their personal data is used by companies, including requesting the removal or restriction of information.

How Privacy Requests Work

When someone submits a data removal request:

- Identification and Validation: The company verifies that the requester is who they claim to be.

- Data Erasure: Personal data about the individual is deleted from the company’s systems, which can affect search and response features.

- Ongoing Filters: Companies may configure their systems to block mentions of the individual’s name to avoid potential legal violations.
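To make these three steps concrete, here is a minimal Python sketch, assuming a simple in-memory data store. The `PrivacyRequest` and `DataStore` classes, their methods, and the placeholder name are all hypothetical; real compliance workflows (and whatever OpenAI actually does) are far more involved.

```python
from dataclasses import dataclass, field


@dataclass
class PrivacyRequest:
    # Hypothetical request record; real GDPR/CCPA workflows collect much more.
    name: str
    identity_verified: bool


@dataclass
class DataStore:
    records: dict[str, list[str]] = field(default_factory=dict)
    suppressed_names: set[str] = field(default_factory=set)

    def handle_erasure(self, req: PrivacyRequest) -> bool:
        # Step 1: identification and validation. Refuse unverified requests.
        if not req.identity_verified:
            return False
        # Step 2: data erasure. Delete what we store about the person.
        self.records.pop(req.name.lower(), None)
        # Step 3: ongoing filter. Remember the name so later queries can be screened.
        self.suppressed_names.add(req.name.lower())
        return True

    def is_suppressed(self, text: str) -> bool:
        # Naive case-insensitive substring check against suppressed names.
        lowered = text.lower()
        return any(name in lowered for name in self.suppressed_names)


store = DataStore(records={"jane doe": ["profile", "posts"]})
store.handle_erasure(PrivacyRequest("Jane Doe", identity_verified=True))
print(store.records)                                # {}
print(store.is_suppressed("Tell me about Jane Doe"))  # True
```

The third step is the one that matters for this story: once a name lands on a suppression list, every later query containing it is affected, not just the erased records.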

If someone named David Mayer exercised their rights under GDPR or CCPA, OpenAI may have implemented a filter to avoid processing any input associated with the name. This makes sense from a compliance perspective but creates unintended consequences for ChatGPT’s functionality.


Technical Glitches: Could “David Mayer” Be a Bug?

Another plausible explanation is that this isn’t about privacy at all, but a technical hiccup. AI systems like ChatGPT process text through tokenization, breaking it into smaller units called tokens (whole words, word fragments, or punctuation). The model then generates its response by predicting one token at a time.
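A toy tokenizer shows the basic idea. This sketch uses greedy longest-match over a small, made-up vocabulary (`TOY_VOCAB` is hypothetical). Production models such as ChatGPT use byte-pair encoding with a much larger learned vocabulary, so their real token boundaries will differ.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match tokenizer over a toy vocabulary (illustration only)."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest vocabulary entry that matches at position i.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab:
                tokens.append(piece)
                i = end
                break
        else:
            # Nothing in the vocabulary matched: fall back to a single character.
            tokens.append(text[i])
            i += 1
    return tokens


TOY_VOCAB = {"David", " May", "er", " is", " a", " name"}
print(tokenize("David Mayer is a name", TOY_VOCAB))
# ['David', ' May', 'er', ' is', ' a', ' name']
```

Note that “Mayer” is split into two pieces here: a name is not necessarily a single token, which is one reason token-level explanations need testing rather than assumption.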

How Tokenization Can Cause Issues

- “Glitch Tokens”: Some tokens exist in a model’s vocabulary but appear rarely, if ever, in its training data. When one shows up in a prompt, the model can produce erratic or nonsensical output.

- Ambiguous Contexts: A name like “David Mayer” might refer to many different people in the training data, making it harder for the model to respond coherently.

- Filters Gone Awry: Even if a moderation filter exists, it may not have been carefully tuned, resulting in unintended glitches.
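The glitch-token idea can be illustrated by counting how often each vocabulary entry appears in training data and flagging the ones the model barely saw. This is a simplified, frequency-only sketch; the vocabulary, the training stream, and the `" xqzUser"` token are hypothetical, and real glitch-token investigations use more sophisticated signals.

```python
from collections import Counter


def find_undertrained_tokens(
    vocab: set[str], training_tokens: list[str], min_count: int = 1
) -> list[str]:
    """Return vocabulary entries seen fewer than `min_count` times in training.

    Such tokens are glitch-token candidates: the model had little or no
    chance to learn what they mean.
    """
    counts = Counter(training_tokens)  # missing keys count as 0
    return sorted(tok for tok in vocab if counts[tok] < min_count)


# Hypothetical data: " xqzUser" is in the vocabulary but never in training.
vocab = {"David", " May", "er", " is", " a", " name", " xqzUser"}
training = ["David", " May", "er", " is", " a", " name", " is", " a"]

flagged = set(find_undertrained_tokens(vocab, training))
print(sorted(flagged))  # [' xqzUser']

# Does a (toy) tokenization of "David Mayer" contain any flagged token?
name_tokens = ["David", " May", "er"]
print(any(tok in flagged for tok in name_tokens))  # False
```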

However, testing by AI experts suggests that “David Mayer” isn’t a glitch token, which points to a different underlying issue: possibly a filtering mechanism layered on top of the AI.
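A filter layered on top of the model would also explain why a reply can stop partway through. The sketch below is hypothetical: `filtered_stream`, `ResponseBlocked`, and the blocklist are illustrative names, and the name from this article is used only as an example, not as a description of OpenAI’s actual filter. The filter watches the streamed text and aborts once a blocked phrase is complete.

```python
from collections.abc import Iterable, Iterator


class ResponseBlocked(Exception):
    """Raised when the hypothetical output filter stops a response."""


def filtered_stream(chunks: Iterable[str], blocked: set[str]) -> Iterator[str]:
    # Sits outside the model: inspects text as it streams to the user.
    seen = ""
    for chunk in chunks:
        seen += chunk
        # Check the accumulated text so phrases split across chunks are caught.
        if any(phrase in seen.lower() for phrase in blocked):
            raise ResponseBlocked("I'm unable to produce a response.")
        yield chunk


model_output = ["The name ", "David", " May", "er", " appears in..."]
shown = []
try:
    for chunk in filtered_stream(model_output, {"david mayer"}):
        shown.append(chunk)
except ResponseBlocked as err:
    print("".join(shown))  # The name David May
    print(err)             # I'm unable to produce a response.
```

Because the check runs on the accumulated text, the split phrase (“ May” + “er”) is still caught, but only after the earlier chunks have already reached the user, which matches the “stops mid-response, then errors” pattern.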

Moderation Filters: Balancing Ethics and Functionality

AI moderation is crucial to ensure systems like ChatGPT avoid harmful, illegal, or sensitive outputs. But moderation isn’t perfect, and the “David Mayer” case highlights the challenges of getting it right.

How AI Moderation Works

- Blocklists and Safeguards: Specific terms, names, or phrases might be flagged to prevent misinformation or harmful content.

- Dynamic Filters: Blocklists and rules can be updated over time, often informed by queries that users or reviewers flag.

- Error Cascades: Overly broad filters can unintentionally block benign queries, like a user asking about “David Mayer.”
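The error-cascade problem is easy to reproduce with a toy blocklist. This sketch compares naive substring matching with whole-word matching; the single `BLOCKLIST` entry is hypothetical and chosen only for illustration.

```python
import re

BLOCKLIST = ["mayer"]  # hypothetical entry, for illustration only


def naive_block(text: str) -> bool:
    # Substring check: blocks any text that merely contains the letters.
    return any(term in text.lower() for term in BLOCKLIST)


def word_block(text: str) -> bool:
    # Whole-word check: \b boundaries prevent matches inside longer words.
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE)
        for term in BLOCKLIST
    )


queries = [
    "Who is David Mayer?",
    "History of the Mayerling incident",
    "Louis Mayer's films",
]
for q in queries:
    print(f"{q}: naive={naive_block(q)}, word={word_block(q)}")
# Who is David Mayer?: naive=True, word=True
# History of the Mayerling incident: naive=True, word=False
# Louis Mayer's films: naive=True, word=True
```

Whole-word matching stops the filter from blocking “Mayerling,” but a bare surname entry still catches unrelated people who share it. Broad entries like this are how benign queries end up blocked.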

The inconsistency in ChatGPT’s responses—sometimes crashing, other times evading—suggests that moderation tools might be struggling to handle this particular case cleanly.


Speculation and Conspiracy Theories

It wouldn’t be the internet without a few conspiracy theories. Some users have linked “David Mayer” to high-profile individuals, such as David de Rothschild, whose middle name is Mayer. Others believe the name might be tied to sensitive historical events or figures, prompting OpenAI to impose a blanket block.

What These Theories Miss

- OpenAI’s filtering policies are likely driven by compliance and safety rather than secretive agendas.

- While privacy and moderation explain the issue logically, theories about deliberate censorship or hidden motives often oversimplify how AI systems work.

That said, the popularity of these theories reveals something important: users want transparency. When systems like ChatGPT behave unpredictably, they leave a gap that people fill with speculation.

Lessons for AI Developers

The “David Mayer” glitch is more than a curiosity—it’s a valuable learning opportunity for developers. As AI tools become more prevalent, understanding and addressing these edge cases will be essential for building trust.

What Developers Can Learn

- Thorough Testing: Identify and address rare scenarios, such as specific name combinations, during development.

- Clear Communication: Instead of crashing or deflecting, the system could provide a simple explanation like, “This name is restricted due to privacy policies.”

- Improved Filters: Fine-tune moderation systems to avoid overly broad restrictions that impact functionality.
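The first two suggestions fit together: a filter that returns an explicit reason instead of failing, checked against a small set of edge cases before release. This is a minimal sketch; `check_query`, `FilterDecision`, and the restricted name are hypothetical, and the message text is the example quoted in the list above.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical restricted-name list, for illustration only.
RESTRICTED_NAMES = {"jane doe"}
RESTRICTION_MESSAGE = "This name is restricted due to privacy policies."


@dataclass(frozen=True)
class FilterDecision:
    allowed: bool
    # Shown to the user in place of a crash or an evasive answer.
    user_message: Optional[str] = None


def check_query(text: str) -> FilterDecision:
    # Normalize case and collapse repeated whitespace so "JANE   DOE" matches too.
    normalized = " ".join(text.lower().split())
    for name in RESTRICTED_NAMES:
        if re.search(rf"\b{re.escape(name)}\b", normalized):
            return FilterDecision(allowed=False, user_message=RESTRICTION_MESSAGE)
    return FilterDecision(allowed=True)


# A tiny edge-case suite of the kind "thorough testing" calls for.
for query in ["Jane Doe", "JANE   DOE", "jane doe's blog", "Janet Doerr"]:
    decision = check_query(query)
    print(f"{query!r}: {'allowed' if decision.allowed else decision.user_message}")
```

Returning a structured decision keeps the restriction visible to the user, and the edge-case list makes it easy to spot both missed variants and over-blocking before users do.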

By focusing on transparency and usability, developers can make AI systems not only smarter but also more reliable.

Privacy and AI: The Bigger Picture

The “David Mayer” case highlights how privacy laws intersect with AI functionality. While protecting individuals’ rights is critical, it’s equally important to ensure that these protections don’t undermine the user experience.

The Balance Between Privacy and Usability

- Strengths of Privacy Laws: They give individuals control over their data and hold companies accountable for how they handle it.

- Challenges for AI: Filtering out sensitive data is tricky, especially when it creates unintended behaviors like crashes or evasions.

As AI tools become integral to daily life, finding a balance between privacy, transparency, and functionality will be a central challenge.

How MyceliumWeb Can Help You Navigate the Digital Future

At MyceliumWeb, we understand the complexities of privacy and AI. That’s why we offer solutions to help you stay ahead in a world shaped by rapid technological advancements.

Whether you’re building the next great AI tool or simply want a more secure online presence, we’re here to help. Contact us today to get started.

Final Thoughts

The mystery of “David Mayer” is more than just an AI quirk—it’s a window into the challenges of building systems that are ethical, functional, and transparent. As we continue to explore the potential of AI, these edge cases remind us of the importance of thoughtful development and clear communication.

And hey, if this story has you thinking about how to better navigate privacy, moderation, or AI, MyceliumWeb is ready to be your partner in innovation! Let’s shape the future together.